The Secret History of DeepSeek AI News


Indeed, following the launch of DeepSeek-R1, Chinese tech titans Tencent, Alibaba and ByteDance introduced LLMs of their own, with both Tencent and Alibaba claiming that their models surpassed the capabilities of DeepSeek-R1. Testing AI's Trading Analysis Capabilities! Yesterday, Artificial Analysis ran an update to include a new offering from Groq, which overtook Cerebras at the top. In a demonstration of the efficiency gains, Cerebras said its version of DeepSeek took 1.5 seconds to complete a coding task that took OpenAI's o1-mini 22 seconds. Whereas answers can take minutes to finish on other hardware, Cerebras said that its version of DeepSeek knocked out some coding tasks in as little as 1.5 seconds. Still playing hooky from "Build a Large Language Model (from Scratch)"; I was on our support rota today and felt a little drained afterwards, so I decided to finish off my AI chatroom. When people try to train such a large language model, they collect a large amount of data online and use it to train these models. Groq, meanwhile, makes chips tailored for large language models. Meanwhile, Google made its Gemini 2.0 Flash Thinking Experimental AI model available to all Gemini app users last week.

OpenAI trained the model using a supercomputing infrastructure provided by Microsoft Azure, handling large-scale AI workloads efficiently. Since OpenAI previewed o1 last year, the company has moved on to its next model, o3. The company also acquired and maintained a cluster of 50,000 Nvidia H800s, which is a slowed version of the H100 chip (one generation prior to the Blackwell) for the Chinese market. 2.47%) H800 chips - the reduced-capability version of Nvidia’s H100 chips used by U.S. The assumption previously was that you need tons and tons, you know, tens if not hundreds of millions of dollars spent on access to chips in order to reach this kind of frontier of AI performance. AI is every company's focus right now, particularly in technology, where industry leaders are spending tens of billions of dollars building out data centers and buying advanced chips to develop more powerful models. For a similar price, the wafer-scale chips spit out some 1,500 tokens per second, compared to 536 and 235 for SambaNova and Groq, respectively. On the hardware side, these gains are being matched by Nvidia, but also by chip startups, like Cerebras and Groq, that can outperform on inference. Cerebras Systems makes huge computer chips, the size of dinner plates, with a radical design.
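To put the quoted throughput figures side by side, here is a minimal Python sketch that turns them into relative speedups. It assumes the "wafer-scale chips" in the comparison are Cerebras's, as the surrounding text suggests; the function name and dictionary layout are illustrative only and do not come from any benchmark tool.

```python
# Tokens-per-second figures as quoted in the text above.
# Assumption: the "wafer-scale chips" figure (1,500) belongs to Cerebras.
TOKENS_PER_SECOND = {"Cerebras": 1500, "SambaNova": 536, "Groq": 235}


def throughput_ratios(figures, reference):
    """Return how many times faster `reference` is than each other entry."""
    ref = figures[reference]
    return {
        name: ref / tps
        for name, tps in figures.items()
        if name != reference
    }


if __name__ == "__main__":
    for name, ratio in throughput_ratios(TOKENS_PER_SECOND, "Cerebras").items():
        print(f"Cerebras vs {name}: {ratio:.1f}x")
```

On the quoted numbers this works out to roughly 2.8x over SambaNova and 6.4x over Groq. These are ratios of the reported figures only, not independent measurements.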


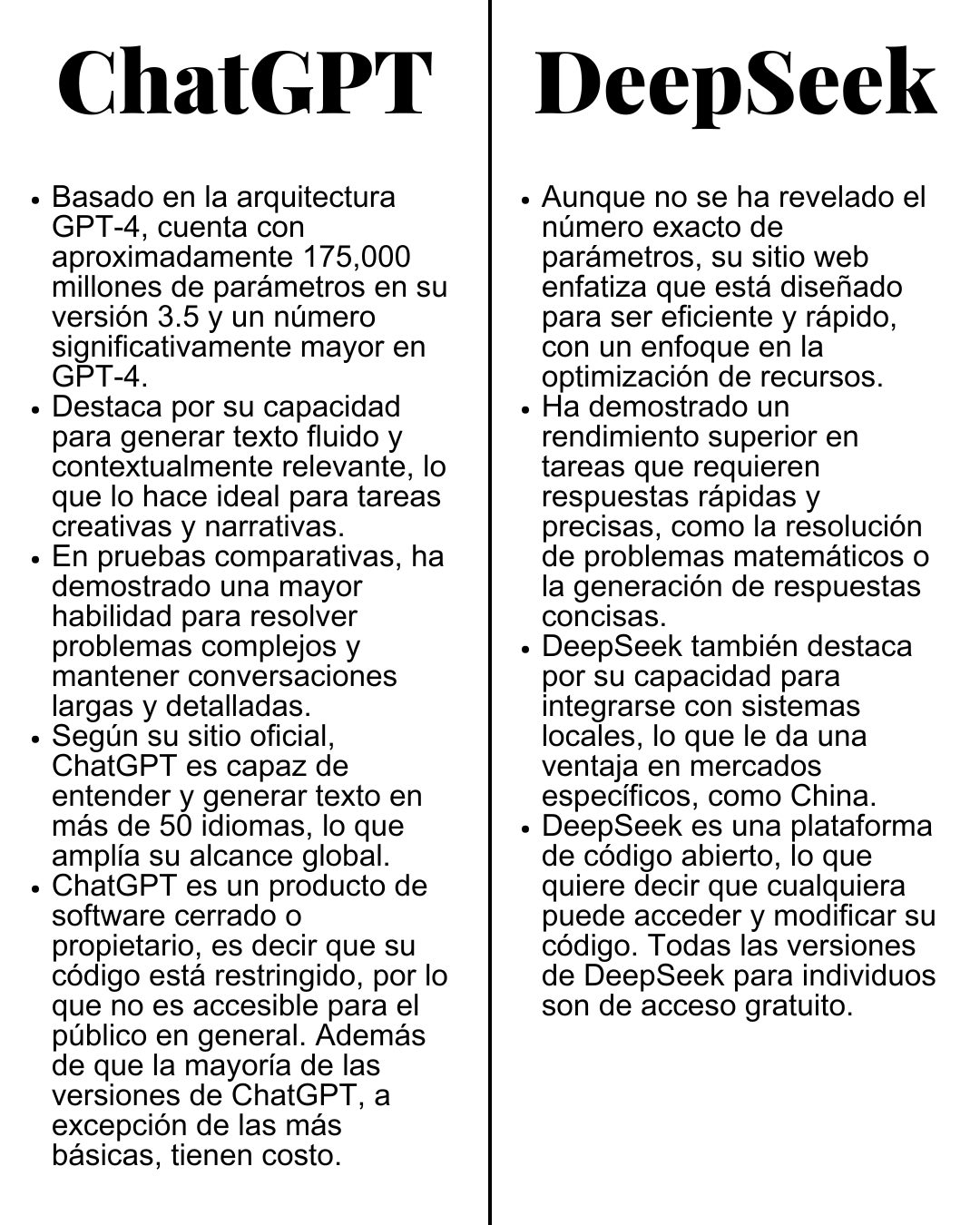

Now, two computer chip startups are drafting on those vibes. Two databases were exposed with over a million lines of log streams containing chat history, API keys, backend details, and other highly sensitive information. DeepSeek’s troubles continue with the leaking of sensitive data belonging to over a million users. Not only was R1 cheaper to train, allegedly just $6 million (though what this number means is disputed), it is cheap to run, and its weights and engineering details are open. Details on Copilot, Gemini, and Notebook LM. DeepSeek’s model appears to run at much lower cost and consumes much less energy than its American peers. There were also big drops for Dutch chip-equipment maker ASML and AI hardware manufacturer Siemens Energy. There is a conceivable argument that fair use would apply to OpenAI and not DeepSeek if OpenAI’s use of the data was found to be "transformative," or different enough to negate infringement, and DeepSeek’s use of ChatGPT was not. So, there is no definitive answer for which one is the best. So what is that, if anyone hasn’t used it, and how do people best use that? Google DeepMind chief executive Demis Hassabis said the Hangzhou-based startup’s AI model "is probably the best work" from China, and is "an impressive piece of work," during a Google event in Paris, CNBC reported.


Hassabis said DeepSeek has demonstrated "extremely good engineering," and that its AI models have deeper geopolitical implications. However, Hassabis said DeepSeek doesn’t show "actual new scientific advance" and is "using known techniques" in the AI industry. DeepSeek shot to the top of the charts in popularity last week, but its models are hosted on servers in China, and experts have since raised concerns about security and privacy. DeepSeek’s cheaper-but-competitive models have raised questions over Big Tech’s massive spending on AI infrastructure, as well as how effective U.S. The leak was discovered when researchers accessed a public database belonging to DeepSeek which allowed full control over database operations, including the ability to access internal data. Move Over Smart Rings. Bad move by me, as I, the human, am not nearly smart enough to verify or even fully understand any of the three sentences. Its ability to generate coherent sentences flawlessly baffled users around the world. Additionally, the judgment ability of DeepSeek-V3 can also be enhanced by the voting technique. Whether you want formal, concise responses or a laid-back, conversational tone, you can tailor the AI to match your style. This bias is often a reflection of human biases present in the data used to train AI models, and researchers have put much effort into "AI alignment," the process of trying to eliminate bias and align AI responses with human intent.
